In a previous blog post on "What is the Cost of Developing, Maintaining, and Deploying a Software Architecture?", we discussed the costs involved in developing, maintaining, and deploying a software architecture.

This post shows how a modular software architecture can decrease these costs. It focuses on the development phase of the code, since this is where a clever use of modularity can have a very direct impact.

Decreasing Software Development Costs Through Modularity

Development costs are a large part of the software costs, and spending valuable development resources wisely is essential. The aim is not necessarily to reduce the cost in absolute terms; it could just as well (or even more often) be to increase the speed or output of the development work. Some key measures to increase the efficiency of software development are to:

- Reuse code according to a module strategy.

- Create faster feedback loops.

- Apply a clear strategy for outsourced code.

- Plan for hardware replacement changes or dual sourcing to avoid panic development.

Now let’s take a closer look at each of these measures. You’ll be better equipped to make informed decisions about your development resources by understanding their significance and how they can be effectively implemented.

Reuse code according to module strategy

In many software systems, similar technologies are implemented several times in different sub-systems. This ranges from relatively small XML parsers to large Web UI frameworks and database technologies.

There can be many reasons why this has occurred, from silo thinking within R&D to overlapping technologies from a company merger, or just a start-up’s opportunistic way of developing, where new technologies are embraced quickly with little thought about phasing out the old.

When developing a modular software architecture, overlapping technical solutions should be uncovered and questioned. The base assumption is that one need requires only one solution.

Another aspect to analyze when working with software modularization is how fast the technologies in use are evolving on the market. We need to prepare for change in technologies that are developing rapidly. For example, large language models (LLMs) are evolving very quickly in today’s market. If a product such as a virtual assistant uses an LLM under the hood to understand natural language, we should anticipate the rapid development of this technology and design the architecture so that the LLM functionality can be upgraded easily, in a modular way.
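As a minimal sketch of what this could look like in code, the assistant below depends only on a small interface, so the LLM backend can be swapped without touching the assistant itself. All names here (`LanguageModel`, `EchoModelV1`, `EchoModelV2`, `VirtualAssistant`) are hypothetical and chosen for illustration; the two "models" are stand-ins that call no real LLM.

```python
from typing import Protocol


class LanguageModel(Protocol):
    """Hypothetical module interface: the only thing the assistant depends on."""

    def complete(self, prompt: str) -> str: ...


class EchoModelV1:
    """Stand-in for the current LLM backend (no real model is called)."""

    def complete(self, prompt: str) -> str:
        return f"v1:{prompt}"


class EchoModelV2:
    """Stand-in for a newer backend that later replaces V1."""

    def complete(self, prompt: str) -> str:
        return f"v2:{prompt.strip().lower()}"


class VirtualAssistant:
    """Uses the LanguageModel interface, never a concrete backend."""

    def __init__(self, model: LanguageModel) -> None:
        self._model = model

    def answer(self, question: str) -> str:
        return self._model.complete(question)


# Upgrading the LLM is a one-line change at the composition point.
assistant = VirtualAssistant(EchoModelV1())
upgraded = VirtualAssistant(EchoModelV2())
```

The design choice is that the fast-moving technology sits behind a stable interface, so an upgrade only replaces one module.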

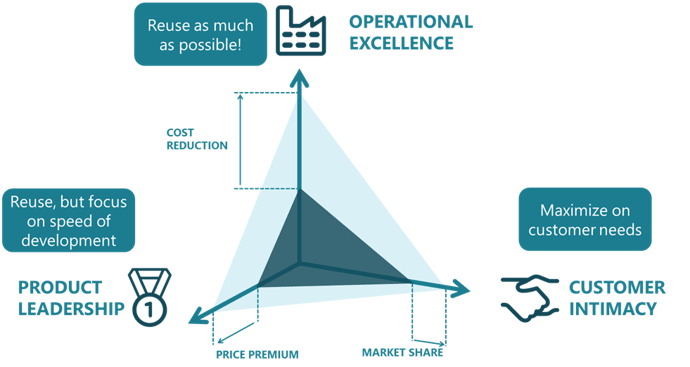

When helping companies transform their products into modular product platforms, we employ the Modular Function Deployment (MFD) process as one of the first phases for our clients in their modularization evolution. The MFD method for modularization divides modules into three groups with different strategies for code reuse:

Customer Intimacy

To stay relevant in the market, your software must offer the right functions for as many customers as possible. This type of software allows the creation of experimental features for specific customer segments. Focusing heavily on code reuse here could be a mistake, since it may be more important to be fast and to apply Agile development ideas such as cross-functional teams and innovation driven by customer feedback. This is where offering many functions and features to fit differing customer needs makes sense, since this could be the main reason the software is relevant for specific customers.

Product Leadership

Technologies that require a higher rate of development, often characterized by a high code churn, should be strategically bundled into “Product Leadership” modules. While emphasizing code reuse, this approach primarily aims to expedite development and deployment.

Since this code changes a lot, applying test automation frameworks to it can be challenging, because a change to the code often means the tests must change too. This doesn’t mean that the code shouldn’t be tested automatically; it means that high code coverage scores are hard to reach and should not be expected. An alternative that can give the most “bang for the buck” is manual exploratory testing. Humans are still best at exploratory testing, and if there is one place where manual testing makes sense, it’s here. Maybe Generative AI will change this in the future, but for now no AI can beat an experienced software tester.

Operational Excellence

“Operational Excellence” modules house technologies that can be maintained on a slow cadence. By reusing this code, we can construct a robust foundation for the other modules in the module system. These modules are ideal to include in a test automation framework, which ensures fewer errors and stable interfaces while also reducing development costs. Avoid spending manual testing resources on these modules, as the likelihood of discovering new errors is minimal.
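One way to make the three strategies visible in a code base is to tag each module with its strategy and look up the resulting policy. The sketch below is only an illustration: the module names are hypothetical, and the policy wording is a paraphrase of the descriptions above, not part of the MFD method itself.

```python
from dataclasses import dataclass
from enum import Enum


class Strategy(Enum):
    CUSTOMER_INTIMACY = "customer intimacy"
    PRODUCT_LEADERSHIP = "product leadership"
    OPERATIONAL_EXCELLENCE = "operational excellence"


@dataclass(frozen=True)
class Policy:
    prioritize_reuse: bool  # should code reuse be a main goal for this module?
    focus: str              # short paraphrase of where effort should go


# Paraphrased from the strategy descriptions; the exact wording is an assumption.
POLICIES = {
    Strategy.CUSTOMER_INTIMACY: Policy(False, "speed and customer feedback"),
    Strategy.PRODUCT_LEADERSHIP: Policy(True, "manual exploratory testing"),
    Strategy.OPERATIONAL_EXCELLENCE: Policy(True, "test automation"),
}

# Hypothetical modules, each assigned to exactly one strategy.
MODULES = {
    "segment_specific_ui": Strategy.CUSTOMER_INTIMACY,
    "language_understanding": Strategy.PRODUCT_LEADERSHIP,
    "config_parser": Strategy.OPERATIONAL_EXCELLENCE,
}


def policy_for(module: str) -> Policy:
    """Return the policy that follows from the module's strategy."""
    return POLICIES[MODULES[module]]


for name in MODULES:
    print(name, "->", policy_for(name).focus)
```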

Figure 1. Cost Drivers for software (size of the item has no relationship with the size of the cost)

Structuring the code with these strategies in mind can substantially increase the efficiency and ability to meet new demands in the digital era. The essential method to make this possible is to implement solid and well-defined interfaces between the software modules, which allow for innovation in the respective “swim-lane” without cross-interface effects. The strategies applied to the module code also apply to the interfaces the modules are implementing.

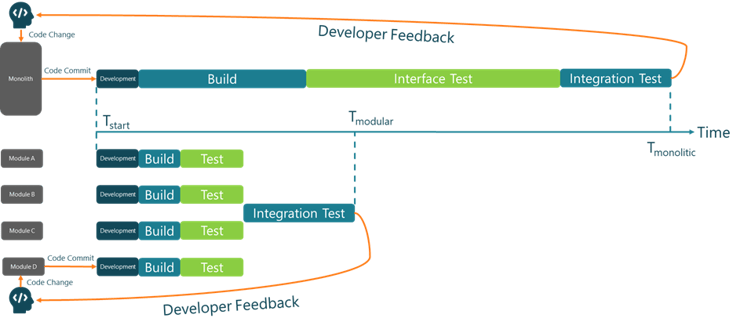

Make Feedback Loops Faster with Automated Testing

Frequent task switching is considered a significant source of lost productivity and is one of the most wasteful activities in software development. If we can limit this waste, substantial cost savings in R&D can be achieved. To understand how to limit task switching, we need to understand why it happens.

As soon as a developer needs to wait a long time for the results of an activity, they will switch to another task for a while until they get the results from the original task. A human can only have a limited number of things in the working memory, which means as soon as tasks are switched, the working memory will be replaced with other important details for the new task at hand. Dragging out old memories from the long-term memory consumes time, and it takes a while to get back to speed on a previous task.

Adding to the time lost due to task switching, setting up a development environment for a different task can be a significant time sink. This is especially true if the development environment requires a different hardware setup, which may also be in limited supply, further delaying the developer’s progress.

Consider a scenario where a developer is working on a new feature and must wait for two weeks before receiving feedback from testers. This delay significantly hampers the developer’s ability to make timely improvements to the feature. In contrast, if the feedback had arrived within 15 minutes while the developer was still immersed in the task, the improvements could have been implemented much faster, leading to a more efficient development process.

If the feedback speed can be improved so much that the developer doesn’t need to switch tasks, their efficiency can remain high. This is one of the main reasons why many companies invest in Continuous Integration and Continuous Deployment (CI/CD) workflows and automated testing, i.e. DevOps.

However, the prerequisite for short feedback loops and successful CI/CD is a well-structured, modular architecture that allows for parallelization of tasks such as build, test, integration, and deployment. Without such an architecture, simply investing in CI/CD is unlikely to yield the desired results. Moreover, designing the architecture with CI/CD tooling and infrastructure in mind makes it possible to use off-the-shelf CI/CD tooling successfully, which avoids in-house tool development and thus reduces costs even further.

The simple example below shows how a parallel build and test pipeline dramatically decreases the time it takes for the developer to get feedback on the change that was made to the software system.

Figure 2. Decreasing the feedback loops with modular software
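The effect of parallelization can also be worked through with simple arithmetic. The sketch below assumes three independent modules with made-up build and test durations and one shared integration step; the numbers are illustrative only and are not taken from the figure or from any measured project.

```python
# Hypothetical per-module build and test durations, in minutes.
MODULE_MINUTES = {
    "ui": {"build": 6, "test": 9},
    "core": {"build": 8, "test": 12},
    "drivers": {"build": 5, "test": 10},
}
INTEGRATION_MINUTES = 5  # assumed final integration step, run once


def sequential_feedback(modules: dict, integration: int) -> int:
    """Monolithic pipeline: every build and test runs one after another."""
    return sum(m["build"] + m["test"] for m in modules.values()) + integration


def parallel_feedback(modules: dict, integration: int) -> int:
    """Modular pipeline: modules build and test in parallel, so the
    slowest module determines the waiting time before integration."""
    return max(m["build"] + m["test"] for m in modules.values()) + integration


print(sequential_feedback(MODULE_MINUTES, INTEGRATION_MINUTES))  # 55
print(parallel_feedback(MODULE_MINUTES, INTEGRATION_MINUTES))    # 25
```

In this toy case the feedback time drops from 55 to 25 minutes. The gain grows with the number of modules that can be built and tested independently, which is why the module boundaries matter as much as the tooling.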

In summary, automate as much as possible but also look at how to build and test faster. You need both the method and tools with modularized software to make an impact in this area. We have data that indicates a more than 90% reduction in delivery times can be achieved, which can tremendously affect how the development department operates.

Apply a clear strategy for outsourced code

It may seem counterintuitive to outsource software development to save costs. Shouldn’t maintaining all code in-house be less costly than buying it from a software vendor? If you have invested in a modular architecture, the module strategy can support the decision to outsource.

Stable software modules, i.e., Operational Excellence modules, might not make sense to outsource since they should also be cost efficient to maintain.

Product Leadership (PL) modules, on the other hand, have a high rate of change, are costly, and require substantial effort to stay competitive. If the PL module isn’t part of the core competence of your R&D, the chances are high that a software partner will be better suited to keep it up to date. Outsourcing this development could then be much more cost-efficient, allowing you to focus your development resources on the things that are core to your business model.

Releasing software under an open-source license can leverage community expertise to enhance and validate code.

Today, numerous companies are embracing open-source software to drive rapid innovation. Open-sourcing your software offers a similar advantage. The key difference is that you retain complete control of the copyright, empowering you to dictate the licensing terms for others to use your IP. While you might also need to invest in moderating the code base, this can also be outsourced, depending on the criticality of the code.

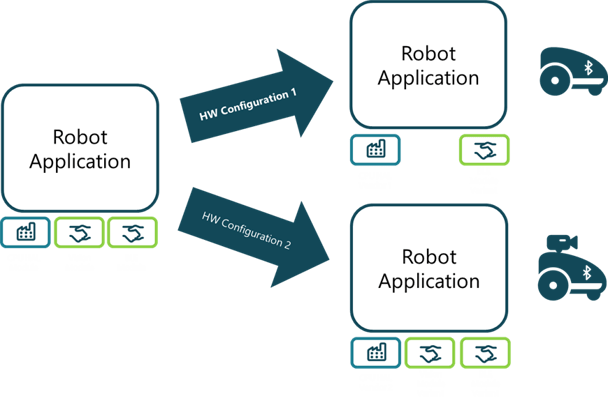

Plan for hardware changes or dual sourcing

Through a long history and knowledge of their hardware, several companies have put many resources into designing intelligent platforms around hardware and mechatronics. In the last 20 years, the difficulty of keeping pace with the advancement in electronics has increased, requiring a certain level of agility. Very few companies can orchestrate these different layers of a product's architecture in a uniform and reasonable manner.

Businesses today are often forced to change the hardware they produce, which requires software changes. This is because they urgently need to replace, for example, the chipset due to the end of production by the supplier (End-of-life) or component shortages on the market, making it temporarily impossible to source the original components. The changes in electronics can also be driven by increased needs for computing power, memory, or other features to support a new, more customer-driven software or service offering, such as over-the-air (OTA) updates.

Modularized software helps you align product planning across the different layers of the product. If correctly applied, it lets you understand which software modules will be affected by a hardware change and which will remain unaffected. In this way, you can introduce new hardware to solve end-of-life issues without panic development in other areas of the software, which can save a lot of indirect quality cost and avoid costly project crashes. It can also be used to introduce a second-source alternative that can lower the cost of the electronics and help with supply chain resilience.

Figure 3. Understanding which software modules are affected by a hardware configuration change
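A simple way to reason about this is to walk the dependency graph from the changed hardware component and stop at modules that expose a stable interface. The sketch below assumes a hypothetical dependency map and a hypothetical hardware abstraction layer (`hal`) with a stable interface; none of these names come from a real product.

```python
from collections import deque

# Hypothetical map: each module lists what it directly depends on.
DEPENDS_ON = {
    "chipset_driver": ["chipset"],
    "bootloader": ["chipset"],
    "hal": ["chipset_driver"],
    "ota_update": ["hal", "bootloader"],
    "business_logic": ["hal"],
    "ui": ["business_logic"],
}

# Modules assumed to keep their interface unchanged when they are adapted.
STABLE_INTERFACES = {"hal"}


def affected_modules(changed: str, depends_on: dict, stable: set) -> set:
    """Return the modules that must be reviewed when `changed` is replaced."""
    # Invert the map so we can walk from a component to its dependents.
    dependents: dict = {}
    for module, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(module)

    affected: set = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for module in dependents.get(current, ()):
            if module in affected:
                continue
            affected.add(module)
            # A stable interface absorbs the change: the module itself is
            # adapted, but the change does not propagate to its dependents.
            if module not in stable:
                queue.append(module)
    return affected


print(sorted(affected_modules("chipset", DEPENDS_ON, STABLE_INTERFACES)))
# ['bootloader', 'chipset_driver', 'hal', 'ota_update']
```

In this example `business_logic` and `ui` stay untouched by the chipset change because they only see the stable `hal` interface, while `ota_update` is still flagged through its direct dependency on the bootloader.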

With a modular software architecture and a clear understanding of how variance in hardware affects the software, you can save substantial development costs in R&D and hardware costs in the supply chain while sustaining product quality.

Optimizing Test & Verification Costs with Modularity

This blog post has focused on optimizing costs in the development phase of the code. Since testing and verification contribute significantly to the hidden costs of software, that topic deserves a separate blog post.

As a teaser for that post, we will investigate how you can further reduce costs by:

- Finding errors early with module interface tests.

- Investing in a modern and efficient test infrastructure utilizing intelligent test schemes.

- Releasing code in a modern DevOps fashion, continuously and with small increments, instead of big-bang annual releases.

Let's Connect!

Modular software architecture is a passion of mine and I'm happy to continue the conversation with you. Contact me directly via email below or on my LinkedIn if you'd like to discuss the topic covered or be a sounding board in general around Modularity and Software Architectures.

Thomas Enocsson

EVP and President Modular Management Asia Pacific AB

thomas.enocsson@modularmanagement.com

LinkedIn

Roger Kulläng

Senior Specialist Software Architectures

roger.kullang@modularmanagement.com

+46 70 279 85 92

LinkedIn