In our previous blog post about Software Modularization, From a Hardware-First to a Software-First Business, Roger Kulläng explains that many traditional hardware companies find themselves in a position today where their hardware products, once the essence of the business, are now merely a vehicle to carry the software products.

The amount of resources and time spent on product development is shifting from hardware to software, and understanding how to best manage software architecture and software development is becoming a fundamental competence across all industrial companies. This trend has been observed over the past years in mobile phones and automotive, and it is rippling across many other businesses. Leaders must react and put software architecture at the top of the agenda to ensure a competitive position.

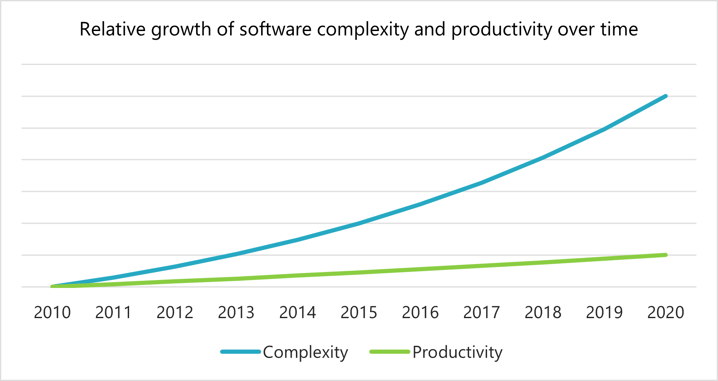

A recent McKinsey study on embedded software in the automotive industry shows that software complexity has increased by a factor of four in the last ten years. The growth in complexity is accelerating, and over the next ten years the rate of increase is expected to be even higher. Yearly productivity improvements are not enough to keep up: over the same ten years, productivity has improved by only a factor of 1.5. Disruptive changes in the way code is developed and tested are needed to avoid being overwhelmed by the increased complexity of the future.

A common failure mode is pushing software development late in the product development cycle. Software is seen as a follower to hardware development and is postponed until the hardware design is known. This puts the time for testing and bug fixing on the critical path, and when the launch date is at risk, shortcuts are taken that increase the risk of quality issues reaching the market.

Agile methodologies incorporate iterations and continuous feedback to increase the efficiency of developing and delivering a software system. But, in all cases, the software must be tested and verified before launch.

In many companies, the cost and lead time of testing and bug fixing are the dominant part of the whole development project. The effort can be up to three times higher than the initial coding cost. The time it takes to release a new feature can be reduced significantly if the testing efficiency increases. Automated testing on a module level, in addition to later-stage system tests, is a way to increase the testing efficiency. In this blog post, we will discuss how Strategic Software Modularity can support an improved way of testing and improve the speed of bug fixing. The effect? Less effort and better results, faster!

Four areas to improve for increased test efficiency:

- Find errors earlier by testing on a module level

- Reduce test effort and time by automated module testing

- Reduce effort to create new tests with stable software module interfaces

- Reduce the “blast radius” of bugs by isolation

Find Errors Earlier by Testing on Software Module Level

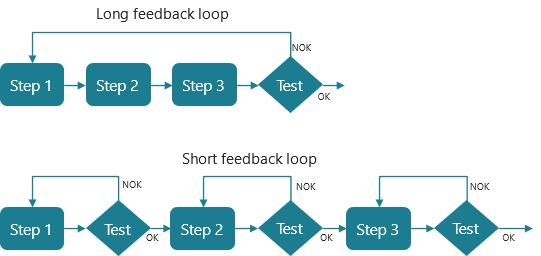

Short feedback loops are a concept that has been around for decades within hardware and electronics manufacturing. If testing can be done earlier, the feedback loop is shorter, and the error can be fixed faster.

One example is the assembly of a dashboard for a car. If the instruments and electronics are tested on the pre-assembled dashboard close to the pre-assembly station, any errors found can be fixed at that time. This is much sooner than when errors are found during testing of the complete car at the end of the final assembly line. Errors found after final assembly are harder to diagnose and often require disassembly to be fixed.

Testing should be done as close as possible to the error source, which shortens the feedback loop.

Generally, if a problem is found early, the cost to diagnose and fix it is much lower compared to a problem found later, perhaps even at the end customer. This is also true for software development.

Some of the reasons for this are:

- Developers know what changes were made to the system since the last successful test. Therefore, less time is needed to isolate the piece of code causing the bug and fix it.

- Developers who are still in the same context understand faster what could be causing the bug, since they don’t have to switch between work tasks.

- No one outside of R&D has to be involved in finding and fixing the bug, which means that communication efficiency can be higher.

- The bug has not yet been released, so it does not need to be fixed in deployed systems. It is contained within the systems inside R&D.

In addition to the cost of finding and fixing the fault, bugs that reach a customer will also generate bad publicity and will hurt the brand.

Since the costs described above are so high, many measures are taken in software development to find bugs early:

- Automatic syntax checking using compilers and build tools.

- Code reviews ("buddy checking" or formal reviews).

- Unit testing – code tests run on a particular software component.

- Subsystem tests – testing a combination of software components running together.

- System test – a combination of subsystems running on the actual hardware, on simulated hardware, or on both.

So, what impact would the addition of a module system test have on the test equation?

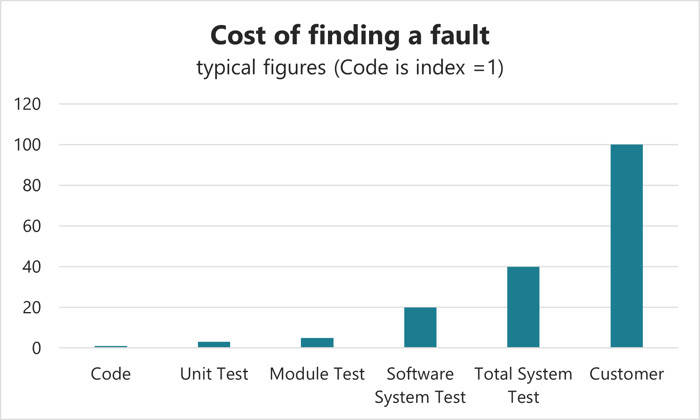

Depending on the type of product and company organization, the impact can be significant. As the chart shows, it is typically at least ten times more costly to find a fault in a system test running on the different target hardware configurations than to find it in a module test.

The typical cost of finding a software fault.

Module testing is even more interesting when you recall that testing is a dominating cost in software development. The test coverage on a module test can also be higher than that of a system test. More corner cases can be tested, and the testing can be improved over time when you have a stable interface. This results in fewer bugs for the customer.
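As a minimal sketch of what a module-level test with corner cases could look like, assume a hypothetical `SpeedLimiter` module whose only public entry point is `clamp_speed`. The module, its names, and its limits are invented for illustration and are not taken from any real product.

```python
import unittest


class SpeedLimiter:
    """Hypothetical module: its public interface is the single method clamp_speed."""

    def __init__(self, max_speed_kmh: float) -> None:
        if max_speed_kmh <= 0:
            raise ValueError("max_speed_kmh must be positive")
        self._max = max_speed_kmh

    def clamp_speed(self, requested_kmh: float) -> float:
        """Return the requested speed limited to the range [0, max_speed_kmh]."""
        return max(0.0, min(requested_kmh, self._max))


class SpeedLimiterModuleTest(unittest.TestCase):
    """Module test: exercises only the public interface, including corner cases."""

    def setUp(self) -> None:
        self.limiter = SpeedLimiter(max_speed_kmh=120.0)

    def test_value_inside_range_is_unchanged(self) -> None:
        self.assertEqual(self.limiter.clamp_speed(80.0), 80.0)

    def test_corner_cases(self) -> None:
        # Values below, at, and just above the limits of the allowed range.
        cases = [(-5.0, 0.0), (0.0, 0.0), (120.0, 120.0), (120.1, 120.0)]
        for requested, expected in cases:
            with self.subTest(requested=requested):
                self.assertEqual(self.limiter.clamp_speed(requested), expected)

    def test_invalid_configuration_is_rejected(self) -> None:
        with self.assertRaises(ValueError):
            SpeedLimiter(max_speed_kmh=0)
```

Saved to a file, the tests can be run with `python -m unittest`. Because they touch only the public interface, they can keep growing with new corner cases for as long as that interface stays stable.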

Reduce Test Effort and Time by Automated Module Testing

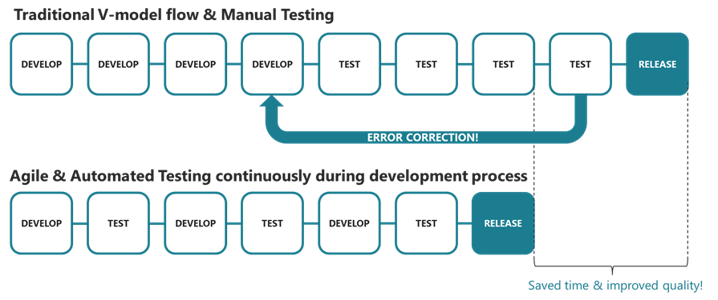

In the traditional V-model development flow, a long development phase is followed by an equally long and tedious system verification phase. All the system requirements gathered at the beginning of the project are verified to have been covered correctly.

If a bug is found late in the system verification, the feedback loop to development can be weeks or even months long. Developers lose the context. Solving the problem takes a long time, including a lot of time to re-verify the system after the bug fix.

If an automated module test is introduced while the software is still in development, the feedback loop is decreased, and the developers are still in context to quickly fix the problem. Since the modules are continuously tested, the delay in the final verification stage is minimal.

Since the tests are automated, human labor can be used instead for exploratory testing of new features, which is a better use of time and more rewarding for the tester.

Comparing traditional V-model testing with Agile and automated testing

Reduce Test Creation Effort by Stable Software Module Interfaces

By adding a Module test step, you can find many faults early, saving significant costs in software verification.

Since there is a cost in creating and maintaining module tests, it is more efficient to do this when the module is strategy-driven and is part of a long-term, customer-driven architecture with stable interfaces. All modules need a well-working test that catches potential faults, but for modules that change often, it is worth spending extra effort to make the testing fast and efficient. To justify investing in high-quality automated Module testing, the Module and its interfaces should be stable and well controlled.

The most effective way is to use test-driven development. The design team starts with developing tests before starting the actual coding. This gives a better understanding of the new feature requirements, and the tests are run several times during the coding process, increasing the quality of both the tests and the code.
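The test-first order can be sketched as follows. The requirement ("a battery level in percent is shown as 0 to 4 bars") and the function name `battery_bars` are hypothetical, chosen only to keep the example small.

```python
# Step 1: the test is written first, directly from the requirement
# "a battery level in percent is shown as 0 to 4 bars".
def test_battery_bars() -> None:
    assert battery_bars(0) == 0
    assert battery_bars(1) == 1
    assert battery_bars(25) == 1
    assert battery_bars(26) == 2
    assert battery_bars(100) == 4


# Step 2: the simplest implementation that makes the test pass.
def battery_bars(percent: int) -> int:
    """Map a battery level in percent (0-100) to a number of bars (0-4)."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return -(-percent // 25)  # ceiling division: 1-25 -> 1, 26-50 -> 2, ...


# Step 3: the test is re-run during coding; it passes once the code is correct.
test_battery_bars()
```

Writing the boundary values (25 and 26 here) into the test before coding forces the requirement to be pinned down early, which is where much of the benefit comes from.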

Tests and code should preferably be developed by different developers. This increases the test quality, since a single developer developing both code and test could design both according to a misunderstood requirement. Chances are, the code will conform with the faulty test.

Strategy-driven Modules

Avoid combining parts that change frequently, due to rapid feature growth or customer variations, with parts that can be kept more stable. To sort that out, we need to look ahead and understand what new features and changes we will have to implement over time. By analyzing how these changes will impact the solutions, smart software modules can be defined, with a change rate indication for each module.

Another important driver for software interfaces is to isolate critical functions for the product in separate modules to limit the effects of any potential new bug.

Stable Interface

A stable interface is the foundation for investing in automated tests that are fast and have a low fault slip-through. To achieve long-term stable interfaces, several things should be considered:

- The interface needs to be placed in a smart position between functions in the architecture. This can be tricky since this position is not always obvious at first glance. Several aspects need to be considered, such as scalability, timing requirements and API strategy.

- It is important to understand possible future customer and other external requirements (such as evolving safety and security standards affecting the design) on the SW product several years ahead, e.g., what would drive a change of the interface?

- A versioning strategy must be implemented, defining what changes to the interfaces are allowed and what rules apply when modifying the interface. A general rule-of-thumb is to never remove an API call from an interface since this breaks the contract of the interface and requires related modules in the system to change their call structure. You should also never modify the response data structure or change the action that the interface should perform. A semantic versioning strategy could, for instance, help here.
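One way such a versioning rule could be checked automatically is sketched below. It assumes plain three-part `MAJOR.MINOR.PATCH` version strings and the usual semantic versioning convention that only a major version bump may break the interface. Pre-release tags and the special treatment of `0.x` versions are ignored, and the function names are hypothetical.

```python
from typing import NamedTuple


class Version(NamedTuple):
    major: int
    minor: int
    patch: int


def parse_version(text: str) -> Version:
    """Parse a 'MAJOR.MINOR.PATCH' string such as '2.4.1'."""
    major, minor, patch = (int(part) for part in text.split("."))
    return Version(major, minor, patch)


def is_compatible(provided: str, required: str) -> bool:
    """True if a module offering `provided` satisfies a caller built against `required`.

    Assumed rule: same major version, and the provided interface is at least
    as new as the one the caller was built against.
    """
    have, need = parse_version(provided), parse_version(required)
    return have.major == need.major and have >= need


print(is_compatible("2.4.1", "2.3.0"))  # newer minor version, same major
print(is_compatible("3.0.0", "2.9.9"))  # major version changed
```

A check like this could run in the build pipeline so that a breaking interface change is flagged before dependent modules are affected.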

A stable interface can allow functionality to be added over time without affecting backward compatibility. If you can predict future capabilities of the interface from the start, you can save on test development and keep the test quality high over the lifetime of the interface.
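A small sketch of adding a capability without breaking existing callers: the hypothetical `read_temperature` call below gains an optional, keyword-only parameter whose default preserves the original behavior. The function, the sensor name, and the stand-in readings are all invented for illustration.

```python
# Stand-in data so the sketch runs without hardware.
_FAKE_READINGS = {"cabin": 20.0}


def read_temperature(sensor_id: str, *, unit: str = "celsius") -> float:
    """Hypothetical interface call, version 1.1.

    Version 1.0 took only sensor_id and returned degrees Celsius. The
    keyword-only `unit` parameter was added later with a default that keeps
    the original behavior, so existing callers and tests are unaffected.
    """
    celsius = _FAKE_READINGS[sensor_id]
    if unit == "celsius":
        return celsius
    if unit == "fahrenheit":
        return celsius * 9 / 5 + 32
    raise ValueError(f"unsupported unit: {unit}")


# A caller written against version 1.0 still works unchanged.
assert read_temperature("cabin") == 20.0
# A newer caller can opt in to the added capability.
assert read_temperature("cabin", unit="fahrenheit") == 68.0
```

The existing module tests keep passing as they are, and only the new capability needs new tests.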

Reduce the Blast Radius of Bugs by Isolation of Software Modules

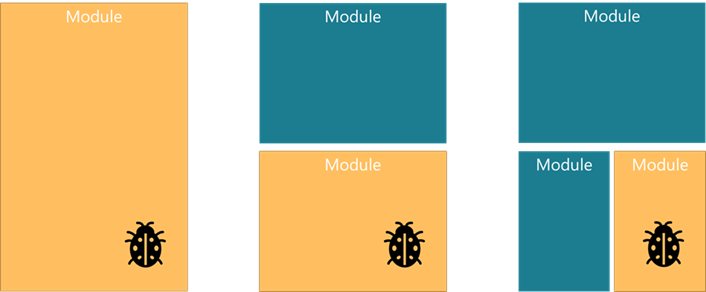

Another important aspect of increasing testing and bug fixing efficiency is a bug's “blast radius”. How much of the code do we need to change to fix a bug once it has been found? If a bug is found in a module, the entire module may need to be replaced. This means that large modules could require the replacement of a lot of code (that potentially can contain more bugs). Smaller modules are less risky to fix and are easier to test.

Let’s make a simple example of three different software systems. The first software system has a single module containing all the code. The second system has two modules, and the third has three modules. Each of the software systems implements the same functionality.

The blast radius of a software bug in a module system

If a bug surfaces in the first system, we need to re-run the test for the entire system. This test will take much more time than the module test in the second or third example, where the bug has been isolated to a much smaller module. Since the other (blue) modules are untouched, they do not require re-testing on the module level to verify that the bug is fixed. This saves significant time when re-running module tests and increases the quality and stability of the software system.

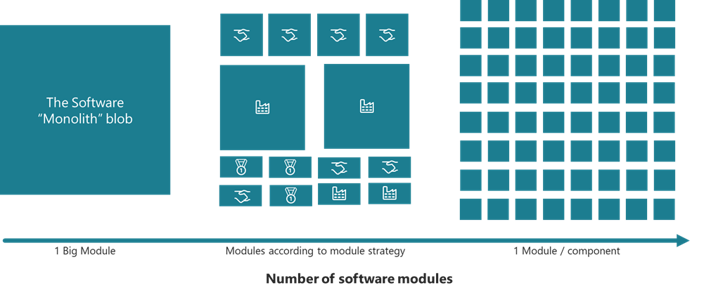

What is the Optimal Size of a Software Module?

So, if it is good to have small modules to control the “blast radius” of bugs in the module, should the modules be as small as possible?

If we continue to make the modules smaller (one for each component at the extreme), the risk of change in the interface is much higher than for a module consisting of a collection of related components that together solve a function in the product. An interface on a higher functional level is more likely to be stable over time. It would be best to find a balance in the size of the software modules somewhere between the “monolith” software blob (to the left in the illustration below) and completely separated components (to the right).

Reduce the Cost of Software Testing and Increase Quality with a Modular Software Architecture

By introducing module-level tests, the issue of increased effort and time needed for software testing and verification is addressed. To avoid Module tests becoming yet another subsystem test, it is important to design software modules that are strategy driven and have stable interfaces. Test-driven development is a very efficient way of creating good tests that work very well as Module tests.

Establishing a test strategy for the modular software architecture is essential for making smart investments in different levels of automated module testing. A software module with a high change rate and a long-term stable interface justifies a higher automation investment compared to one with a lower change rate or an unstable interface.
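The rule of thumb in the paragraph above could be written down as a simple classification. This is only a sketch: the `ModuleProfile` fields, the example module, and the change-rate threshold are hypothetical placeholders to be replaced by your own data and judgment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModuleProfile:
    name: str
    changes_per_year: int      # how often the module's implementation changes
    interface_is_stable: bool  # is the interface expected to stay stable long term?


def automation_priority(module: ModuleProfile, high_change_threshold: int = 12) -> str:
    """Classify how much test automation investment a module justifies.

    'high' applies only to a frequently changed module with a stable interface.
    Everything else gets 'baseline', since every module still needs a working test.
    The threshold is an arbitrary placeholder.
    """
    frequently_changed = module.changes_per_year >= high_change_threshold
    if frequently_changed and module.interface_is_stable:
        return "high"
    return "baseline"


payments = ModuleProfile("payments", changes_per_year=30, interface_is_stable=True)
print(payments.name, automation_priority(payments))
```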

A first step for your organization is to investigate the cost of finding bugs at the different levels of testing. This will indicate the potential for how much you can save by a shortened feedback loop. Since testing and bug-fixing are related to introducing change into the architecture, it could be defined as a cost of complexity that you can analyze.
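A first pass at this investigation could be as simple as averaging the find-and-fix effort per test level from your bug tracker. The sketch below assumes the records can be exported as pairs of test level and hours. The figures are made-up placeholders, not measurements, and should be replaced with your own data.

```python
from collections import defaultdict
from statistics import mean

# Placeholder records (NOT real measurements):
# (test level where the bug was found, hours spent to find and fix it).
BUG_RECORDS = [
    ("module", 2.0),
    ("module", 3.0),
    ("system", 20.0),
    ("system", 30.0),
    ("field", 80.0),
]


def average_cost_per_level(records):
    """Return the mean find-and-fix effort in hours for each test level."""
    hours_by_level = defaultdict(list)
    for level, hours in records:
        hours_by_level[level].append(hours)
    return {level: mean(hours) for level, hours in hours_by_level.items()}


for level, hours in average_cost_per_level(BUG_RECORDS).items():
    print(f"{level}: {hours:.1f} h per bug")
```

Comparing the averages between levels gives a rough indication of how much could be saved by catching the same bugs one level earlier.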

The main challenge in defining smart and strategic software modules is defining the best interfaces. You need to isolate things that are likely to change from things that could be kept more stable. One way to understand the drivers of change is the MFD method used for developing strategic modular systems. Below you can download our 5-step guide to get started.

Let's Talk!

Software Modularity is a passion of ours and we are happy to continue the conversation with you. Contact us directly via email or on LinkedIn if you'd like to discuss the topic covered here, or if you would like us to be your sounding board on Modularity and Strategic Product Architectures in general.

Roger Kulläng

Senior Specialist Software Architecture

+46 70 279 85 92

roger.kullang@modularmanagement.com
